Long story short: if a Jenkins job starts Docker containers (docker run commands) and you abort that job from the Jenkins UI, the job is aborted only in the UI. Jenkins does not know how to stop the containers running on the slave machines, so they keep running in the background. In most cases this causes unexpected problems, such as data being modified by an orphaned container that nobody is watching anymore.

How to tackle this issue?

Option 1: using container names

I personally like to use the job name as the container name. The strategy is to write the build step so that, before the main docker run command, it deletes any existing container with the same name, e.g.:

# remove any existing container with this name;
# "|| true" keeps the build step going when there is nothing to remove

docker rm -f "$JOB_NAME" || true

docker run --name "$JOB_NAME" ...

This guarantees that at most one docker container with that name is running at any time. However, the cleanup happens only when the job is started again, so a container left behind by an aborted job keeps running until then.
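One practical wrinkle: Docker only accepts container names matching [a-zA-Z0-9][a-zA-Z0-9_.-]*, while a Jenkins job name can contain spaces or, for jobs inside folders, slashes. Here is a minimal sketch of how the remove-then-run step could be wrapped to cope with that. The function names container_name and run_named, the "job-" prefix, and the DOCKER override variable are all my own assumptions for illustration, not something Jenkins or Docker provides.

```shell
# Hypothetical helper: turn a Jenkins job name into a valid container name.
container_name() {
    # Replace every character outside [A-Za-z0-9_.-] with "_" and add a
    # "job-" prefix so the result always starts with an alphanumeric character.
    printf 'job-%s\n' "$(printf '%s\n' "$1" | sed 's/[^A-Za-z0-9_.-]/_/g')"
}

# Hypothetical wrapper: remove any leftover container, then start a new one.
# DOCKER can be overridden (e.g. DOCKER=echo) for a dry run; this variable
# is an assumption of this sketch.
run_named() {
    name=$(container_name "$1")
    shift
    # Ignore the result: there may simply be no container to remove.
    "${DOCKER:-docker}" rm -f "$name" >/dev/null 2>&1 || true
    # Remaining arguments are passed to docker run unchanged.
    "${DOCKER:-docker}" run --name "$name" "$@"
}

# Example call from a build step (my-image is a placeholder):
#   run_named "$JOB_NAME" my-image ./run-tests.sh
```

Setting DOCKER=echo prints the docker run command instead of executing it, which is handy for checking the generated name before wiring this into a real job.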

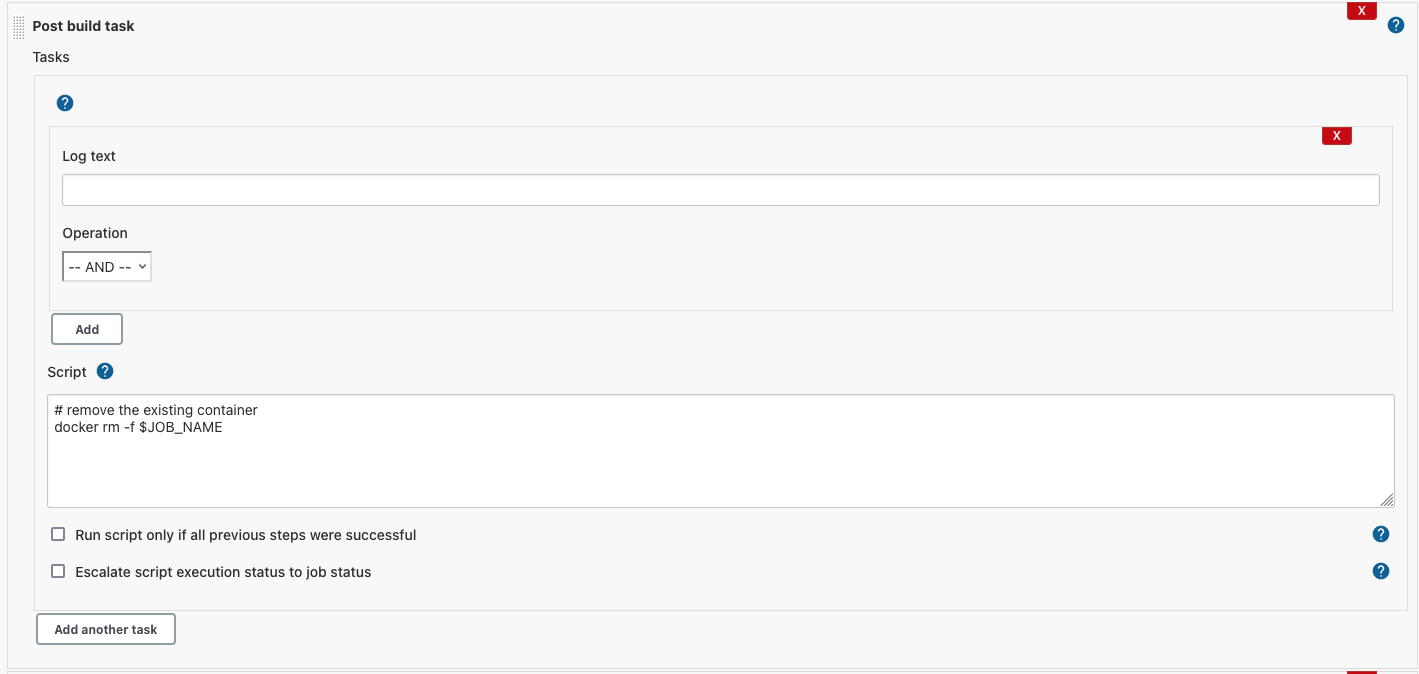

Option 2: post build task plugin

This option uses the Post Build Task plugin to run the docker rm -f $JOB_NAME command once the build has finished. Starting the docker container with a predefined name is still required. Configuration should look like this:
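As a rough sketch, the script part of such a post-build task could look like the following. It assumes the container was started with --name set to exactly the value passed in here, and it always returns success so that the cleanup itself cannot change the build result. The function name cleanup_container and the DOCKER override variable are hypothetical, introduced only for this example.

```shell
# Hypothetical cleanup routine for the post-build script field.
cleanup_container() {
    # DOCKER can be overridden for testing; by default the real CLI is used.
    if "${DOCKER:-docker}" rm -f "$1" >/dev/null 2>&1; then
        echo "cleanup ok: $1"
    else
        # Depending on the Docker version, a missing container may land here.
        echo "cleanup skipped or failed: $1"
    fi
    # Never fail the post-build step because of the cleanup.
    return 0
}

# In the post-build task itself:
#   cleanup_container "$JOB_NAME"
```

The echo lines end up in the build log, so it is easy to see afterwards whether the cleanup step actually ran.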

I personally like to combine the two options and clean up both before and after each job.